Download: student_assessment_tracker.xlsx

Use this Excel template to aggregate data from your classroom assessments.

You've already incorporated best practices like interleaved practice, spaced rehearsal, and retrieval practice, but how do you know your teaching is working? How do you know your students have retained content you taught them a few months ago?

Your gradebook gives you a limited, myopic view of progress made by individual students. Most grading systems are organized around the accumulation of points over time and provide little evidence of a teacher's impact on specific conceptual understandings:

"[T]he starting point for any integrated assessment system must be the formative purpose. Teachers can always aggregate fine-scale data on student achievement to provide a grade or other summary of achievement, but they cannot work out what the student needs to do next on the basis of a grade or score...For the record to serve as more than merely a justification for a final report card grade, the information that we collect on student performance must be instructionally meaningful. Knowing that a student got a B on an assignment is not instructionally meaningful. Knowing that the student understands what protons, electrons, and neutrons are but is confused about the distinction between atomic number and atomic mass is meaningful. This information tells the teacher where to begin instruction."

Clymer, J. B., & Wiliam, D. (2007). Improving the way we grade science. Educational Leadership, 64, 19.

Here's how I've implemented this advice:

- Plan backwards. Break down course content into chunks that are broad enough to be meaningful but narrow enough to be assessable. (These are known by variously faddish names such as "enduring understandings", "essential learning outcomes", and so forth.) Not everything I teach makes it onto this list. For example, I think it's absolutely essential that high school general chemistry students demonstrate a good conceptual understanding of chemical equilibrium, and I do teach (and quiz) equilibrium calculations, but I don't consider the quantitative aspect essential and I don't put it on tests. My course has 25 of these chunks, each representing 1-2 weeks of instruction.

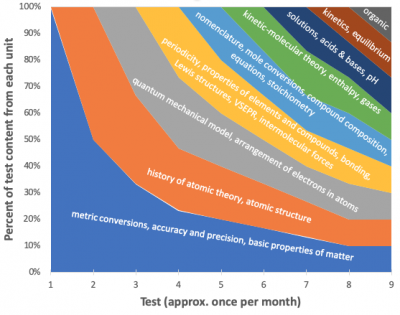

- Make good cumulative electronic assessments. It's possible to analyze results if the test is given on paper, but it's extremely unpleasant. I use a Moodle chemistry item bank I've developed for this purpose, and it makes analysis a breeze. My first test has 20 questions on the metric system, accuracy and precision, and basic properties of matter. My second test has 15 questions on these and 15 questions on atomic theory and structure. My third test has 10 questions based on each of the first two tests and 10 questions on new material, and so on through the end of the school year.

- Export scores. Include the class average for each item. If the items on the test go in the same order as the curriculum, you can just copy and paste into the attached spreadsheet. Repeat this every time you give a new test.

- Reclaim your data. This is the big payoff! Too often, "data" is just a cudgel for clueless administrators to whack teachers with (e.g., "There are lots of Fs in your class, what are you doing wrong?" or "Our standardized test scores are down, that must mean our teachers are ineffective!"). Now YOU can use granular, curriculum-based data to inform YOUR OWN practice.

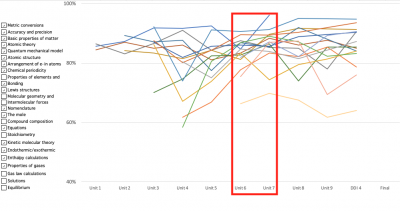
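If you'd rather script the export-and-aggregate step than paste by hand, something like the following might work. It's a minimal Python sketch, assuming each exported item arrives as a (concept, class average) pair; the function names, concept labels, and numbers are hypothetical and are not taken from the attached spreadsheet or from Moodle's export format.

```python
from collections import defaultdict


def concept_averages(items):
    """Average the per-item class averages for each concept on one test.

    `items` is a list of (concept, class_average) pairs, one per test item,
    where class_average is the fraction of the class answering correctly.
    """
    scores = defaultdict(list)
    for concept, avg in items:
        scores[concept].append(avg)
    return {concept: sum(vals) / len(vals) for concept, vals in scores.items()}


def build_tracker(tests):
    """Combine several tests into {concept: [average on each test, or None]}."""
    per_test = [concept_averages(items) for items in tests]
    concepts = []
    for result in per_test:
        for concept in result:
            if concept not in concepts:
                concepts.append(concept)  # keep curriculum order
    return {c: [result.get(c) for result in per_test] for c in concepts}


# Hypothetical item-level exports from two cumulative tests.
test_1 = [("metric system", 0.80), ("metric system", 0.60),
          ("properties of matter", 0.90)]
test_2 = [("metric system", 0.75), ("atomic structure", 0.50),
          ("atomic structure", 0.70)]

tracker = build_tracker([test_1, test_2])
for concept, row in tracker.items():
    print(concept, row)
```

Each row of `tracker` corresponds to one concept and each column to one test, with `None` wherever a concept wasn't assessed.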

Here's an example of how I did that in a recent school year. Looking at the results of my unit 6 test, I noticed that students had either performed poorly or backslid significantly on a handful of particular chemistry concepts. I implemented a whole-class instructional intervention. Student performance on the next unit test improved on every one of the six concepts the intervention focused on, whereas the concepts not addressed by the intervention were decidedly a mixed bag.
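The same kind of check can be sketched in Python. This is only an illustration: the cutoffs (`low=60`, `drop=10`), the concept names, and the scores are all made up, and the spreadsheet itself doesn't apply any fixed thresholds.

```python
def flag_concepts(previous, current, low=60, drop=10):
    """Return concepts that scored below `low` or fell by at least `drop` points.

    `previous` and `current` map concept -> class percent correct on two
    consecutive tests. Both thresholds are illustrative assumptions.
    """
    flagged = []
    for concept, score in current.items():
        before = previous.get(concept)
        backslid = before is not None and before - score >= drop
        if score < low or backslid:
            flagged.append(concept)
    return flagged


def changes(before, after, concepts):
    """Point change from one test to the next for the given concepts."""
    return {c: after[c] - before[c] for c in concepts if c in before and c in after}


# Hypothetical class percent-correct values on three consecutive unit tests.
unit_5 = {"moles": 82, "Lewis structures": 78, "naming": 90}
unit_6 = {"moles": 65, "Lewis structures": 55, "naming": 88}
unit_7 = {"moles": 80, "Lewis structures": 72, "naming": 85}

targets = flag_concepts(unit_5, unit_6)            # candidates for intervention
others = [c for c in unit_6 if c not in targets]   # everything else

print("intervention concepts:", changes(unit_6, unit_7, targets))
print("other concepts:", changes(unit_6, unit_7, others))
```

Comparing the two dictionaries side by side gives a rough sense of whether the flagged concepts moved differently from the rest, though with a single class it's a sanity check rather than a controlled experiment.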

I've used this tool to address other questions throughout the school year, including:

- Does a week of snow days negatively affect retention? (Probably, but mostly for quantitative skills, and the decline is reversible.)

- Which sub-skill is the main reason students struggle with intermolecular forces? (Probably molecular geometry.)

- Are there any skills I can stop reviewing and testing because students have mastered them? (Metric conversions in April; Lewis structures by May.)
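The last question lends itself to a quick script. Here's a rough Python sketch, assuming "mastered" means the class scored at or above some threshold on the last few tests where the concept appeared. That definition, the function name, and the numbers are hypothetical; pick whatever criterion fits your own course.

```python
def mastered(history, threshold=90, streak=3):
    """Concepts whose last `streak` recorded scores are all >= `threshold`.

    `history` maps concept -> list of class percent-correct values per test,
    with None for tests that did not assess the concept.
    """
    result = []
    for concept, scores in history.items():
        recorded = [s for s in scores if s is not None]
        recent = recorded[-streak:]
        if len(recent) == streak and all(s >= threshold for s in recent):
            result.append(concept)
    return result


# Hypothetical tracker rows: one list entry per cumulative test.
history = {
    "metric conversions": [70, 85, 92, 95, 94],
    "Lewis structures": [None, 60, 75, 91, 93],
    "molecular geometry": [None, None, 55, 62, 70],
}

print(mastered(history))
```

Requiring a streak rather than a single high score guards against dropping a skill after one unusually easy set of questions.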

What questions about your own practice would you address with this data collection model?